You can adopt a few key strategies to block bots and boost site performance. First, consider using a CDN such as Cloudflare. It can improve site speed and reliability, and it can also filter out unwanted bot traffic.

Also, create a robots.txt file. It tells crawlers which parts of your site they may crawl, which helps you manage traffic from well-behaved bots. Compliance is voluntary, so robots.txt alone will not stop malicious bots. Finally, use a web application firewall (WAF) to boost protection against malicious bots.

Remember to double-check your configurations and settings to ensure they are effective.

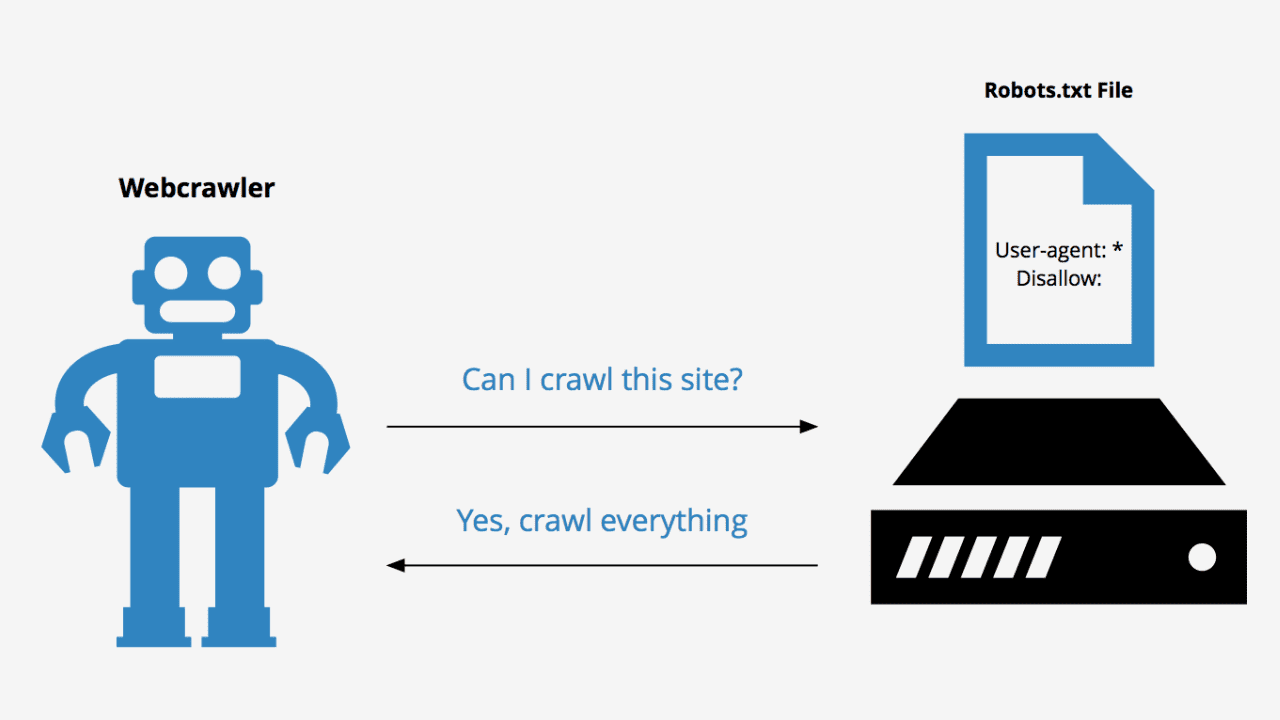

What is a robots.txt file, and how does it work?

A robots.txt file tells search engine crawlers which URLs they can access on your site. Its main purpose is to keep crawlers from overloading your site with requests; it is not a mechanism for keeping a web page out of Google. To keep a web page out of Google, block indexing with a noindex rule or password-protect the page.
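To make the mechanism concrete, here is a minimal sketch that uses Python's standard `urllib.robotparser` module to evaluate a robots.txt file the way a well-behaved crawler would. The file contents, the `BadBot` user agent, and the `example.com` URLs are assumptions made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; the bot name and paths are illustrative only.
ROBOTS_TXT = """\
User-agent: BadBot
Disallow: /

User-agent: *
Disallow: /admin/
"""

def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a parser that can answer crawl questions."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

parser = build_parser(ROBOTS_TXT)

# BadBot is disallowed everywhere.
print(parser.can_fetch("BadBot", "https://example.com/blog/"))          # False
# Other crawlers may fetch public pages...
print(parser.can_fetch("Googlebot", "https://example.com/blog/"))       # True
# ...but not anything under /admin/.
print(parser.can_fetch("Googlebot", "https://example.com/admin/login")) # False
```

Note that this only models what a compliant crawler decides for itself. Nothing in robots.txt prevents a bot from ignoring the rules and requesting the page anyway.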

If you use a CMS such as Wix or Blogger, you might not be able to edit robots.txt directly. Instead, your CMS might have a search settings page that lets you specify whether search engines can crawl your page.

To hide or unhide a page from search engines, check your CMS’s instructions on page visibility. For example, search for “Wix hide page from search engines.”

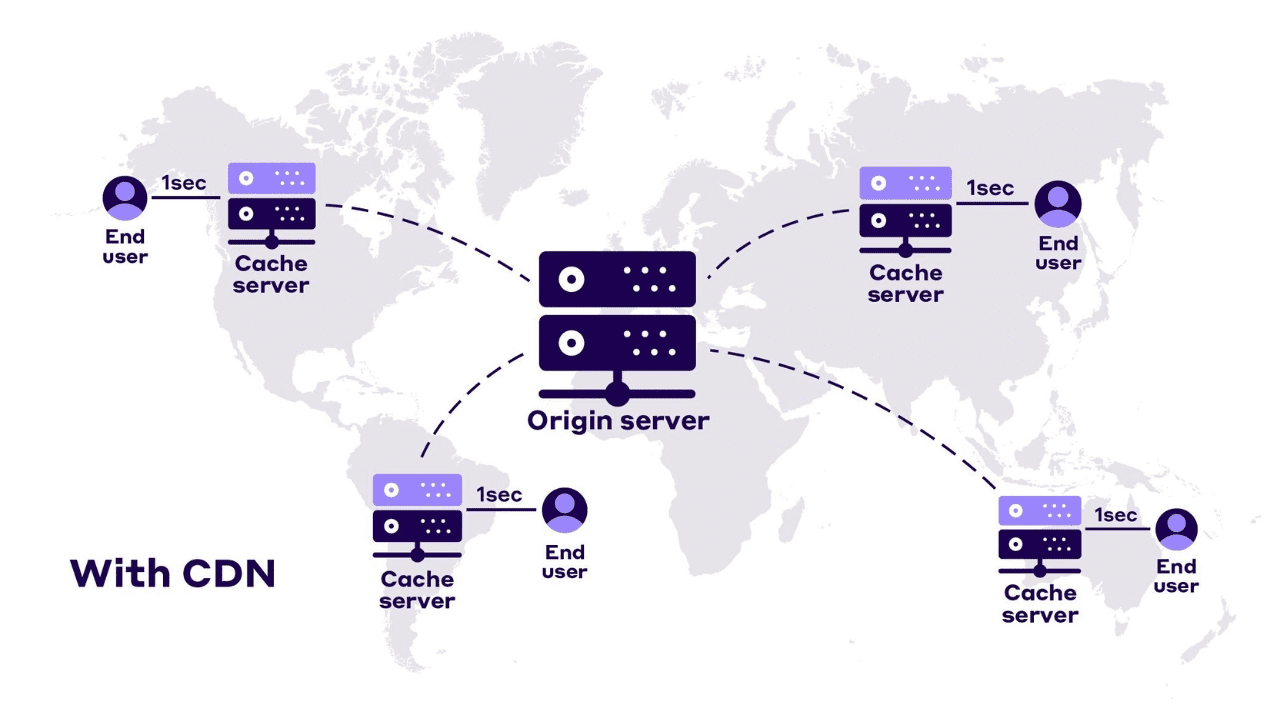

How does a CDN improve website performance and block bots?

A content delivery network (CDN) is a group of servers distributed across many geographic locations. The servers cache content near end users.

A CDN accelerates the delivery of the assets needed to load web content. These include HTML pages, JavaScript files, stylesheets, images, and videos.
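The caching idea can be sketched in a few lines. The following is a toy model of a single edge server, not how any particular CDN is implemented: the `EdgeCache` class, the `origin` function, and the 60-second time-to-live are all assumptions chosen for illustration.

```python
import time
from typing import Callable, Dict, Tuple

class EdgeCache:
    """Toy model of one CDN edge server: serve cached copies, fall back to origin."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes],
                 ttl_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[float, bytes]] = {}  # path -> (expiry, body)
        self.origin_hits = 0

    def get(self, path: str) -> bytes:
        now = self._clock()
        cached = self._store.get(path)
        if cached is not None and now < cached[0]:
            return cached[1]              # cache hit: the origin is not contacted
        body = self._fetch(path)          # cache miss or expired entry
        self.origin_hits += 1
        self._store[path] = (now + self._ttl, body)
        return body

def origin(path: str) -> bytes:
    """Hypothetical origin server that returns the asset body."""
    return f"content of {path}".encode()

edge = EdgeCache(origin, ttl_seconds=60)
for _ in range(3):
    edge.get("/styles.css")
print(edge.origin_hits)  # 1: only the first request reached the origin
```

Repeated requests for the same asset are answered from the edge, which is why a CDN both speeds up delivery and reduces load on the origin server.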

CDN services are reliable and widely used. Today, most web traffic is served through CDNs, including traffic from major sites like Facebook, Netflix, and Amazon.

A properly configured CDN can also help defend websites against distributed denial-of-service (DDoS) attacks.

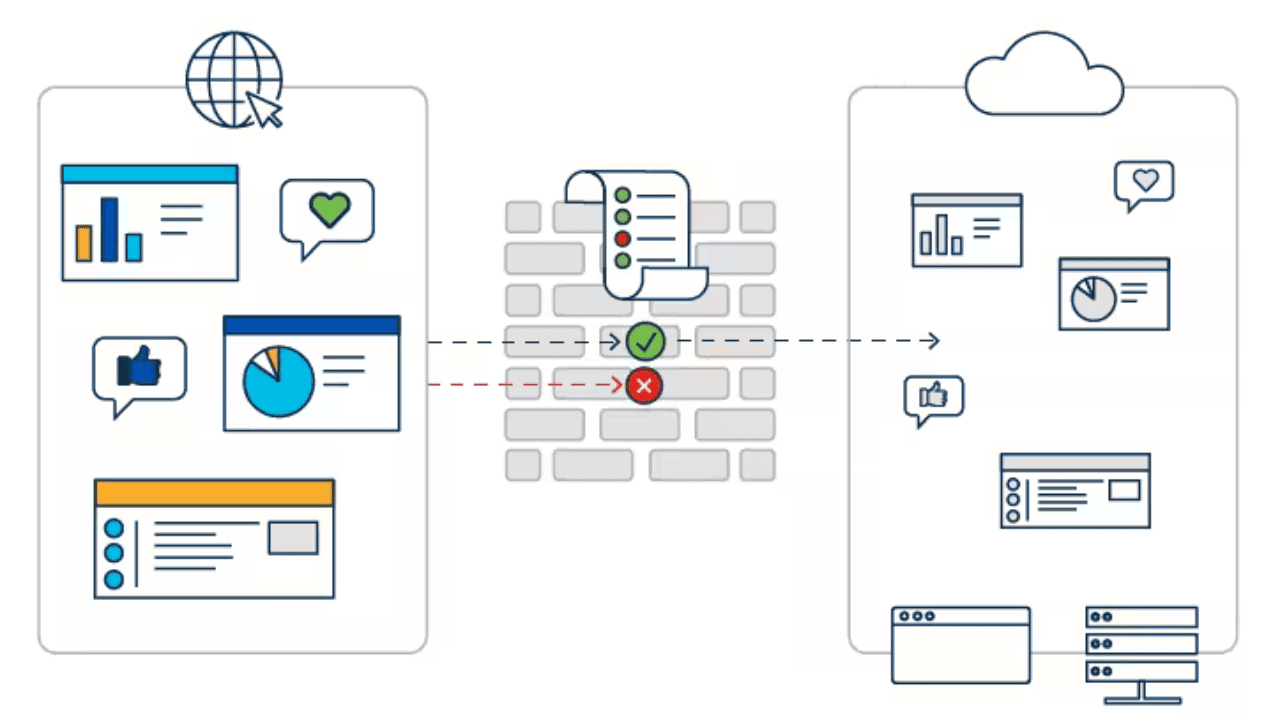

What are the benefits of using a web application firewall (WAF) to block bots?

A WAF, or web application firewall, protects web apps by filtering and monitoring HTTP traffic between the apps and the internet. It typically protects web apps from attacks such as cross-site request forgery (CSRF), cross-site scripting (XSS), file inclusion, and SQL injection.

A WAF is a protocol layer 7 defense (in the OSI model) and is not designed to defend against all types of attacks. This method of attack mitigation is usually part of a suite of tools that together create a holistic defense against a range of attack vectors.

Deploying a WAF in front of a web application places a shield between the web application and the internet. An ordinary proxy server protects a client machine’s identity by using an intermediary.

A WAF works the other way around. It is a type of reverse proxy that protects the server from exposure, because clients pass through the WAF before reaching the server.

A WAF operates through a set of rules often called policies. These policies aim to protect against vulnerabilities in the application by finding and filtering out malicious traffic.
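As a rough illustration of what a policy looks like, the sketch below checks a request target against a short list of pattern-based rules. The `Rule` class, the `inspect` function, and the three signatures are hypothetical and deliberately simplistic; production WAF rule sets are far more thorough and are not limited to regular expressions.

```python
import re
from dataclasses import dataclass
from typing import List, Optional
from urllib.parse import unquote_plus

@dataclass(frozen=True)
class Rule:
    name: str
    pattern: re.Pattern

# Hypothetical, intentionally naive signatures for illustration only.
POLICY: List[Rule] = [
    Rule("sql_injection",
         re.compile(r"'\s*(or|and)\s+\d+\s*=\s*\d+|union\s+select", re.IGNORECASE)),
    Rule("xss", re.compile(r"<\s*script\b", re.IGNORECASE)),
    Rule("path_traversal", re.compile(r"\.\./")),
]

def inspect(request_target: str, policy: List[Rule] = POLICY) -> Optional[str]:
    """Return the name of the first rule the request matches, or None if it passes."""
    decoded = unquote_plus(request_target)  # undo URL encoding before matching
    for rule in policy:
        if rule.pattern.search(decoded):
            return rule.name
    return None

print(inspect("/search?q=shoes"))                                   # None
print(inspect("/login?user=admin'+OR+1=1"))                         # sql_injection
print(inspect("/comment?text=%3Cscript%3Ealert(1)%3C/script%3E"))   # xss
print(inspect("/download?file=../../etc/passwd"))                   # path_traversal
```

A request that matches a rule would be blocked or challenged before it reaches the application, while everything else is forwarded as usual.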

The value of a WAF comes in part from the speed and ease with which policy modifications can be implemented, allowing for faster response to varying attack vectors. During a DDoS attack, for example, rate limiting can be implemented quickly by modifying WAF policies.
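Rate limiting itself is simple to sketch. The example below uses a sliding-window counter per client IP, which is one common approach among several; the `SlidingWindowLimiter` class, its parameters, and the sample IP address (taken from a range reserved for documentation) are assumptions for illustration.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict

class SlidingWindowLimiter:
    """Allow at most max_requests per client within the last window_seconds."""

    def __init__(self, max_requests: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._clock = clock
        self._hits: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, client_ip: str) -> bool:
        now = self._clock()
        hits = self._hits[client_ip]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False          # over the limit: reject or challenge
        hits.append(now)
        return True

limiter = SlidingWindowLimiter(max_requests=3, window_seconds=60.0)
results = [limiter.allow("203.0.113.7") for _ in range(5)]
print(results)  # the first three requests pass, the rest are rejected
```

In this model, responding to an attack amounts to lowering `max_requests` or lengthening `window_seconds`, which mirrors the idea of tightening a policy without touching the application.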

Conclusion:

To block unwanted bots and improve site performance, a multi-pronged approach is needed. Use a CDN like Cloudflare to enhance content delivery and filter out unwanted bots. Use a robots.txt file to manage crawler access and reduce server strain without hurting SEO.

Install a WAF to defend against attacks by controlling traffic through defined policies. These steps increase site efficiency and provide effective protection against malicious bots. Regular configuration checks are vital to keep these defenses effective.

Frequently Asked Questions

Block fake traffic by identifying unusual patterns, using firewalls, and configuring your analytics tools to exclude known bot IP addresses.

Use anti-spam plugins, CAPTCHAs, and firewalls to filter out and block spam traffic to your website.

Bots hit websites for various reasons, including data scraping, spam, SEO manipulation, and brute-force attacks.

To stop bots from crawling your site, use the robots.txt file to disallow specific user agents and implement CAPTCHAs or challenge-response tests.

Websites block bots using CAPTCHAs, rate limiting, firewalls, and anti-bot solutions that detect and filter out bot traffic.
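The earlier suggestion to exclude known bot IP addresses from analytics can be sketched as follows. This is a minimal example using Python's standard `ipaddress` module; the blocklist, the hit records, and the function names are hypothetical, and the addresses come from ranges reserved for documentation rather than from real bots.

```python
import ipaddress
from typing import Dict, List

# Hypothetical blocklist; these are reserved documentation ranges, not real bot addresses.
KNOWN_BOT_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.42/32"),
]

def is_known_bot(ip: str) -> bool:
    """Return True if the address falls inside any listed network."""
    addr = ipaddress.ip_address(ip)
    return any(addr in network for network in KNOWN_BOT_NETWORKS)

def exclude_bot_hits(hits: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Keep only the hits that do not come from a listed network."""
    return [hit for hit in hits if not is_known_bot(hit["ip"])]

hits = [
    {"ip": "203.0.113.9", "path": "/"},
    {"ip": "192.0.2.10", "path": "/pricing"},
    {"ip": "198.51.100.42", "path": "/login"},
]
print(exclude_bot_hits(hits))  # only the hit from 192.0.2.10 remains
```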